Introduction:

Unmanned aerial systems (UAS) can be used to collect imagery over a mine, and processing software can then calculate the volumes of stock piles from that imagery. This lab goes over this process for sand piles at the Litchfield mine.

Volumetric analysis is important for a company that wants to know how much material it has removed and has lying in piles at the mine. UAS can be key to getting accurate figures in a very short amount of time. This lab will go over obtaining these figures in Pix4D as well as in ArcMap.

Multiple tools in ArcMap will be used during this process:

-Extract By Mask: The Extract by Mask tool clips a raster to the area defined by a mask, such as a shapefile, to create a clipped section of the raster.

-Surface Volume: The Surface Volume tool calculates the volume between a surface (here a DSM) and a reference plane at a height that the user provides.

-Raster to TIN: The Raster to TIN tool converts a raster into a triangulated irregular network (TIN).

-Add Surface Information: The Add Surface Information tool attributes features with elevation information taken from a surface. In this lab it was run with the pile shapefile and the TIN so that a minimum elevation (Z_Min) field was available for each pile.

-Polygon Volume: The Polygon Volume tool calculates the volume between a surface and a polygon held at a constant height. In this lab it calculated the volume of each pile from its TIN.

Methods:

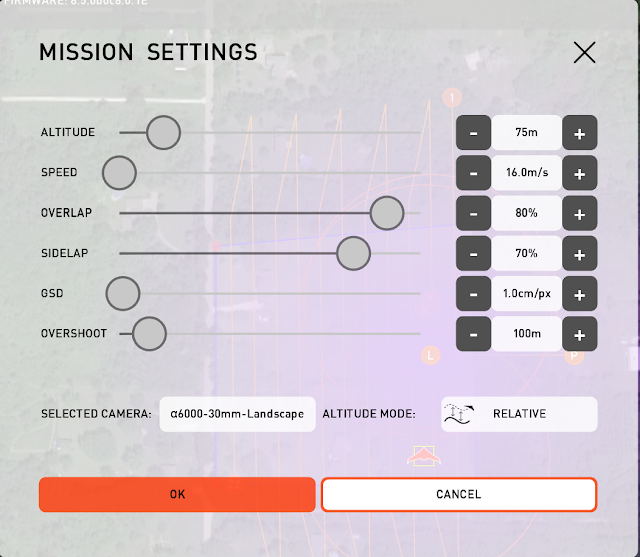

Pix4D was used to process the imagery in a previous lab; for further details, see the previous blog post, Building Maps with Pix4D. The already processed data was brought into Pix4D to begin the volumetrics process. Pix4D has a built-in Volumes tab that makes the process very user friendly. The user clicks the Volumes tab, then clicks the cylinder-shaped tool at the top of the page and traces the outline of the pile to be examined. This was done for three separate piles at the Litchfield mine site. The user then clicks the Compute button and Pix4D automatically calculates the volumes of the piles. The resulting figures can be seen on the left of the image below.

The first step toward calculating volumes in ArcMap was to export the pile outlines from Pix4D as shapefiles. This was done with the extract button at the top of the volumes work column, and the files were saved into an appropriate folder. The resulting shapefiles were then used to clip the piles out of the digital surface model (DSM) in ArcMap with the Extract by Mask tool. The DSM was the input raster, and the pile shapefile was used as the feature mask data. The result was a separate clipped raster for each pile.
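The clipping idea can be sketched in plain Python. This is only an illustration of the concept, not how ArcMap implements Extract by Mask: the grid values, the outline coordinates, and the function names `point_in_polygon` and `extract_by_mask` are all hypothetical, and row index is treated as the y coordinate for simplicity.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray from (x, y) cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def extract_by_mask(dsm, polygon, cell_size=1.0):
    """Keep cells whose centre falls inside the polygon; others become None."""
    clipped = []
    for row_idx, row in enumerate(dsm):
        out_row = []
        for col_idx, z in enumerate(row):
            cx = (col_idx + 0.5) * cell_size  # cell centre, x
            cy = (row_idx + 0.5) * cell_size  # cell centre, y
            out_row.append(z if point_in_polygon(cx, cy, polygon) else None)
        clipped.append(out_row)
    return clipped


# Hypothetical 4x4 DSM (elevations in metres) and a square pile outline.
dsm = [
    [10.0, 10.0, 10.0, 10.0],
    [10.0, 12.0, 13.0, 10.0],
    [10.0, 12.5, 14.0, 10.0],
    [10.0, 10.0, 10.0, 10.0],
]
outline = [(1.0, 1.0), (3.0, 1.0), (3.0, 3.0), (1.0, 3.0)]
clipped = extract_by_mask(dsm, outline)
```

Cells outside the outline are marked `None`, which plays the role of NoData in the clipped raster.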

The next step was to use the Surface Volume tool to find the volume of each pile. The clipped raster was used as the input surface and a text file was specified as the output. The reference plane was set to Above so that the tool would find the volume above a given height. The Identify tool was used to find the base height of the pile, which was then entered as the plane height, and the tool was run. This resulted in a table that provided the volume of the pile.
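As a rough sketch of what "volume above a plane" means, the calculation can be approximated by summing, for every cell, the height of material above the plane times the cell area. This is a simplified approximation and not necessarily the exact algorithm ArcMap uses; the function name `volume_above_plane`, the grid values, and the 2 m cell size are assumptions made for the example.

```python
def volume_above_plane(raster, plane_height, cell_size):
    """Sum the column of material above plane_height over every valid cell."""
    cell_area = cell_size * cell_size
    volume = 0.0
    for row in raster:
        for z in row:
            # Skip NoData cells (None) and cells at or below the plane.
            if z is not None and z > plane_height:
                volume += (z - plane_height) * cell_area
    return volume


# Hypothetical clipped pile raster: elevations in metres, None = NoData.
clipped_pile = [
    [None, 10.0, None],
    [10.0, 12.0, 11.0],
    [None, 10.5, None],
]
volume = volume_above_plane(clipped_pile, plane_height=10.0, cell_size=2.0)
```

Because the plane height enters every cell's contribution, the result depends directly on the base height read with the Identify tool.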

The following model illustrates the steps used to find the volumes of the individual piles.

The next process was to convert the rasters into TINs and use those to find the pile volumes. The first tool used was Raster to TIN. The clipped pile raster was the input, the default parameters were kept, and the tool was run. Raster to TIN on its own does not provide the base elevation needed for the volume calculation, so the Add Surface Information tool was run next. The pile shapefile exported from Pix4D was the input feature class, the TIN was the input surface, and Z_Min was chosen as the output property, which created a Z_Min field holding the lowest surface elevation for each pile.

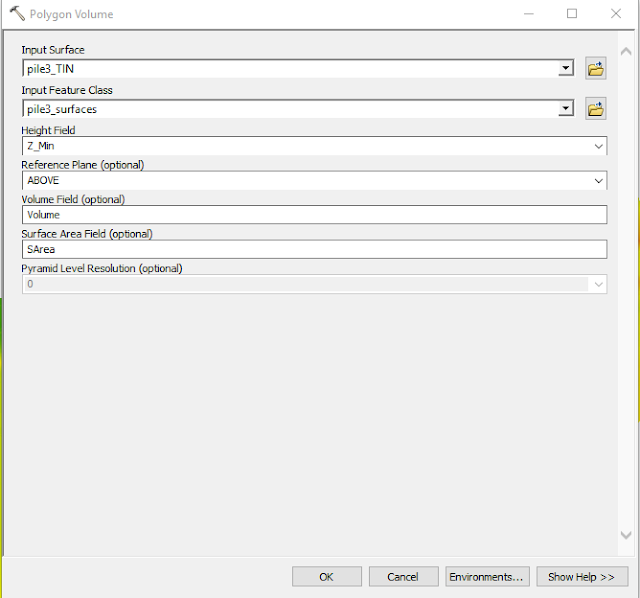

With the Z_Min field in place, the Polygon Volume tool was used to find the pile volumes. The TIN was the input surface, the pile shapefile was the input feature class, the newly created Z_Min field was used as the height field, and the reference plane was set to Above so that the tool measured the volume of the surface above the Z_Min height. The tool was run and an output volume was added to the shapefile of each pile.
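The geometry behind a TIN-based volume can be sketched as follows: each triangle, together with the horizontal plane at the minimum elevation, bounds a prism whose volume is the triangle's planimetric area times the mean height of its three vertices above that plane. This is a minimal illustration under that assumption, not ArcMap's implementation; the function names and the small pyramid-shaped "pile" are hypothetical.

```python
def triangle_plan_area(a, b, c):
    """Planimetric (x, y) area of a triangle given three (x, y, z) vertices."""
    cross = (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])
    return abs(cross) / 2.0


def tin_volume_above_min(triangles):
    """Return (z_min, volume of the TIN above the horizontal plane at z_min)."""
    z_min = min(v[2] for tri in triangles for v in tri)
    volume = 0.0
    for a, b, c in triangles:
        # Mean vertex height above the base plane; never negative because
        # the base is the lowest vertex in the whole TIN.
        mean_height = (a[2] + b[2] + c[2]) / 3.0 - z_min
        volume += triangle_plan_area(a, b, c) * mean_height
    return z_min, volume


# Hypothetical pile: a pyramid with a 2 m x 2 m base at 10 m and apex at 13 m.
apex = (1.0, 1.0, 13.0)
corners = [(0.0, 0.0, 10.0), (2.0, 0.0, 10.0), (2.0, 2.0, 10.0), (0.0, 2.0, 10.0)]
triangles = [(corners[i], corners[(i + 1) % 4], apex) for i in range(4)]
z_min, volume = tin_volume_above_min(triangles)
```

For this pyramid the result can be checked against the textbook formula, base area times height divided by three.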

The model below illustrates the workflow for measuring volumetrics in ArcMap by creating a TIN from a DSM and then using the Polygon Volume tool.

The volumes of the three piles had now been calculated using Pix4D, the Surface Volume tool in ArcMap, and the Polygon Volume tool in ArcMap. The data then needed to be put together and presented in an organized manner.

Discussion:

Three different methods were used to compute volumetrics of three different stock piles in the Litchfield mine. The results for each pile varied slightly for each different method.

The volume for each pile was lowest with Pix4D. This could be because it was hard to trace the pile outline precisely, or because of the way the program calculates volume. The Surface Volume tool gave the middle value for piles 2 and 3 and the highest volume for pile 1. It might not be the most accurate because the user provides the base height on which the measurement is based, and a poorly chosen value could skew the result. The Polygon Volume tool gave the highest volumes for the second and third piles and the middle value for the first pile. This method should be the most accurate because it uses the surface information to find the lowest point and then takes the volume of everything above it.
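The sensitivity to the user-supplied base height can be illustrated with a small sketch. The cell elevations, cell area, and candidate base heights here are placeholder values chosen for illustration, not measurements from the Litchfield data, and `volume_above_plane` is a hypothetical helper using the simple per-cell approximation.

```python
def volume_above_plane(heights, base, cell_area):
    """Approximate volume above `base` from a flat list of cell elevations."""
    return sum(max(z - base, 0.0) for z in heights) * cell_area


# Placeholder cell elevations (metres) for one clipped pile.
heights = [100.2, 100.8, 101.5, 102.0, 101.4, 100.9, 100.3]
cell_area = 0.25  # square metres per cell (assumed)

# Try three candidate base heights, 0.1 m apart.
volumes = {
    base: volume_above_plane(heights, base, cell_area)
    for base in (99.9, 100.0, 100.1)
}

for base, vol in sorted(volumes.items()):
    print(f"base {base:.1f} m -> volume {vol:.3f} m^3")
```

When every cell sits above both candidate bases, shifting the base changes the volume by the footprint area times the shift, so the error grows with the size of the pile footprint.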

Conclusion:

Using UAS for stock pile volumetrics has huge advantages. Volumetrics for a large amount of data can be completed in a much shorter time than with traditional methods. One person could do it for a whole mine, whereas doing it by hand would require more time and expense. There are multiple ways to do it and each has its advantages. Pix4D can give a relatively accurate measure of volume by just outlining the stock pile. The tools in ArcMap give a more accurate measure but take more time and more knowledge from the user.